Lattice Structure Optimization

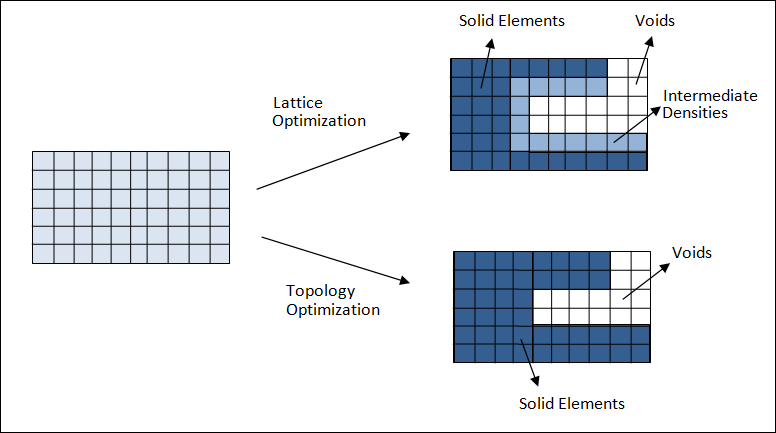

Lattice Structure Optimization is a novel solution to create blended Solid and Lattice structures from concept to detailed final design. This technology is developed in particular to assist design innovation for additive layer manufacturing (3D printing). The solution is achieved through two optimization phases. Phase I carries out classic Topology Optimization, albeit reduced penalty options are provided to allow more porous material with intermediate density to exist. Phase II transforms porous zones from Phase I into explicit lattice structure. Then lattice member dimensions are optimized in the second phase, typically with detailed constraints on stress, displacements, etc. The final result is a structure blended with solid parts and lattice zones of varying material volume. For this release two types of lattice cell layout are offered: tetrahedron and pyramid/diamond cells derived from tetrahedral and hexahedral meshes, respectively. For this release the lattice cell size is directly related to the mesh size in the model.

Lattice Structure Optimization is initially similar to topology optimization; however, design domains can now include elements with intermediate densities. Theoretically, from a physical point of view, such structures can be more efficient compared to those in which design elements are penalized to densities of 0 or 1.

Figure 1: Difference between Lattice Optimization (Phase 1) and Topology Optimization.

A possible major application of Lattice Structure Optimization is Additive Layer Manufacturing which can take advantage of the intricate lattice representation of the intermediate densities. This can lead to more efficient structures as compared to blocky structures, which require more material to sustain similar loading.

It should be noted that typically porous material represented by periodic lattice structures exhibits lower stiffness per volume unit compared to fully dense material. For tetrahedron and diamond lattice cells, the homogenized Young's modulus to density relationship is approximately given as $E = \rho^{1.8} E_0$, where $E_0$ specifies Young's modulus of the dense material and $\rho$ is the density. Varying levels of lattice/porous domains in topology results are controlled by the parameter POROSITY. With POROSITY defined as LOW, the natural penalty of 1.8 is applied, which would typically lead to a final design with mostly fully dense material distribution (or voids) if a simple 'stiffest structure' formulation (compliance minimization for a given target volume) is applied. However, you may favor a higher proportion of lattice zones in the design for considerations other than stiffness. These can include considerations for buckling behavior, thermal performance, dynamic characteristics, and so on. Also, for applications such as biomedical implants, porosity of the component can be an important functional requirement. For such requirements, there are two different options for POROSITY. At HIGH, no penalty is applied to the Young's modulus to density relationship, typically resulting in a high portion of lattice zones in the final results of Phase I. At MED, a reduced penalty of 1.25 is applied for a medium level of preference for lattice presence.

Design constraints can be defined in both Phase I and II of the Lattice Optimization process. Some specific constraints, like stresses (via LATSTR), are not applied to the first phase, but passed to the second phase. Although some design constraints are applied during Phase I, it is important to consider every required design constraint during Phase II of the optimization process. The design constraints defined in Phase II should be sufficiently exhaustive to sustain the use case if the final design is expected to be ‘3D printed’ directly. For traditional structures, users usually go through a second stage where the topology concept is interpreted and then fine-tuned by size optimization with all design constraints included. The second phase of the lattice optimization process should be viewed as the fine-tuning stage for the design, since further manual manipulation of a lattice structure with hundreds of thousands of cell members is close to impossible.

In the first phase, the design domains are optimized similar to a regular topology optimization, except that intermediate density elements are retained in the model. As explained above, this theoretically may improve performance of the optimized structure for considerations other than compliance (for example, buckling) when compared to a regular topology optimization. The intermediate densities in the optimized structure are represented by user-defined lattice types (micro-structures). The volume fraction of the lattice structure corresponds to the element density at the end of the first phase. During the optimization process, stiffness of the intermediate densities corresponds to micro-structural homogenized properties.

Definition

The first phase of the Lattice optimization process requires the inclusion of the LATTICE continuation line on all DTPL bulk data entries. This activates Lattice optimization, and the LB and UB fields can be used to specify the range of densities for elements that can be converted into Lattice elements. Elements with densities above UB remain as solid elements and those with densities lower than LB are removed from the model. The solid elements which lie within the LB and UB density bounds (intermediate densities) defined on the LATTICE continuation line are replaced by the corresponding Lattice Structures (Lattice Type – LT). The solid elements can be first or second order elements.

The lattice structures are constructed using 1D Tapered Beam (CBEAM) elements, as shown below. The initial radius of the lattice structure beam elements for each lattice structure cell is proportional to the density of the intermediate density elements which were replaced, such that the initial volume in phase 2 is equal to that at the end of phase 1. In phase 2, the concept of lattice beam element radius is interpreted as joint thickness. Thickness for each joint at the conjunction of lattice beam elements is determined and the radius of each element can vary across the beam length.

The beam elements have the property PBEAML and TYPE=ROD is automatically assigned for each element. The thickness of this tapered beam element can vary along its length and only circular cross-sections are available. The X(1)/XB field on the PBEAML entry is always set to 1.0 for tapered beam elements. For TYPE=ROD, if X(1)/XB is equal to 1.0, the DIM(1)A references the radius of the beam at end A and DIM(1)1 references the radius of the beam at end B. This element is a tapered beam formulation, and averaging is not used to determine the average radius of the beam. Instead, the true tapered beam formulation is used with the given dimensions. The true tapered beam formulation is only available for TYPE=ROD.
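
The LB/UB classification and the volume-preserving initial radius described above can be sketched in a few lines of Python. This is only an illustration, not the solver's implementation: the function names, the uniform-radius cylinder model, and the `total_beam_length` input (the summed length of all beams in one lattice cell) are assumptions introduced here, and joint overlap between beams is ignored.

```python
import math


def classify_element(density: float, lb: float, ub: float) -> str:
    """Classify a Phase 1 element by its density against the LB/UB bounds."""
    if density < lb:
        return "void"      # below LB: removed from the model
    if density > ub:
        return "solid"     # above UB: remains a solid element
    return "lattice"       # within [LB, UB]: replaced by a lattice cell


def equivalent_beam_radius(density: float, element_volume: float,
                           total_beam_length: float) -> float:
    """Uniform beam radius whose total volume equals density * element volume.

    Assumption: every beam in the cell is a cylinder of the same radius and
    the material shared at the joints is not double counted.
    """
    if total_beam_length <= 0.0:
        raise ValueError("total_beam_length must be positive")
    target_volume = density * element_volume
    return math.sqrt(target_volume / (math.pi * total_beam_length))


# Example with hypothetical bounds LB = 0.1 and UB = 0.8
for rho in (0.05, 0.4, 0.95):
    print(rho, classify_element(rho, lb=0.1, ub=0.8))
```

Because the beam volume scales with the square of the radius, a lattice cell at density 0.4 starts Phase 2 with twice the radius of an otherwise identical cell at density 0.1 under this simplified model.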

The LATSTR field on the LATTICE continuation line can be used to specify the stress constraint for the second phase (Stress Constraints). The lattice type can be controlled using the LT field on the LATTICE continuation line. The values LT = 1, 2, 3, or 4 can be used to control the Lattice structure which replaces the intermediate density elements. LT=3 and LT=4 are currently only applicable to Hexahedral elements. Below are the lattice types:

LT = 1: Hexahedral elements (CHEXA)

LT = 2: Hexahedral elements (CHEXA)

For LT = 2, CHEXA elements, the floating grid points at the center of lattice structure faces facing the adjacent solid element at the Lattice-Solid Interface are connected using automatically generated Freeze CONTACT. The automatic creation of such CONTACT is to make certain that the center nodes on the faces connected to the solid elements are not left floating (disconnected) in the second phase.

LT = 3: Hexahedral elements (CHEXA)

| 1. | The lattice is based on newly created nodes only. Therefore, SPCs and FORCEs that existed in the original model and were applied to the design domain will not be preserved and you will have to redefine them. |

| 2. | Contact interfaces with a minimum of one surface in the design domain will also not be preserved. OptiStruct internally creates new N2S contacts between the new nodes and solid elements. It is recommended to verify the newly generated contact interfaces to make certain the contact behavior is as expected. |

LT = 1 or LT = 2: Tetrahedral elements (CTETRA)

Pyramid elements (CPYRA)

Pentahedron elements (CPENTA)

If a Lattice Optimization solver deck is named “<name>.fem”, then at the end of the first phase a new file “<name>_lattice.fem” is generated. This new file includes the 1D element data which represents the new Lattice Structure and the sizing optimization set-up. You have to redefine optimization responses, constraints, and the objective function; additionally the contact sets may also have to be redefined (Contact).
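
The file naming convention can be sketched as a small helper for scripting a two-phase run. The function and dictionary keys are hypothetical names introduced here; the `_lattice` suffix comes from the paragraph above, and the `_lattice_optimized` suffix is the Phase 2 output file described later in this section.

```python
from pathlib import Path


def lattice_phase_files(deck: str) -> dict:
    """Derive the file names that follow from a Phase 1 input deck name."""
    path = Path(deck)
    return {
        "phase1_input": path.name,
        # written at the end of Phase 1, used as the Phase 2 input
        "phase2_input": f"{path.stem}_lattice{path.suffix}",
        # written at the end of Phase 2
        "phase2_output": f"{path.stem}_lattice_optimized{path.suffix}",
    }


print(lattice_phase_files("bracket.fem"))
```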

The amount of intermediate densities in a model depends primarily on the penalty applied. This is similar to the penalty applied during a topology optimization. If the penalty is increased, the intermediate densities are pushed more towards either 0.0 or 1.0. This leads to a low number of elements with intermediate densities, which corresponds to a lower percentage of lattice structures (low porosity). If the penalty is reduced, the percentage of lattice structures is higher (high porosity). The design parameter LATPRM, POROSITY can be used to control the porosity of the model by controlling the amount of intermediate densities in the model in the first stage of the Lattice Structure Optimization run (default = HIGH).

HIGH: This option generates a relatively high number of intermediate density elements in the first stage of the optimization run (high porosity). If this option is selected, no penalty is applied to the Young’s modulus to density relationship. This generally results in a high volume fraction of lattice zones in the final results of Phase 1. Note that this possibly leads to an over-estimation of stiffness performance for a particular model. This implies that the stiffness performance can drop significantly in Phase II.

MED: This option generates a relatively medium number of intermediate density elements in the first stage of the optimization run (medium porosity). If this option is selected, a reduced penalty of 1.25 is applied for a medium level of preference for lattice zone presence. Typically, this option leads to a reduced number of Lattice zones as compared to the option HIGH.

LOW: This option generates a relatively low number of intermediate density elements in the first stage of the optimization run (low porosity). If this option is selected, a natural penalty of 1.8 is applied, which would typically lead to a final design with mostly fully dense material distribution if a simple “stiffest structure” formulation (compliance minimization for a given target volume) is applied.

Figure 2: Difference between HIGH, MED, and LOW porosity options.

During Phase 1, a modified topology optimization is carried out to generate a structure with a range of intermediate densities (0.0 to 1.0). The density of a topology element is correlated to its stiffness using the following equation:

$$E = \rho^{p} E_0$$

Where,

$E$ is the optimum stiffness of the topology element for the density $\rho$

$E_0$ is the stiffness of the initial design space material (actual material data)

$p$ is the penalty applied to the density to control the generation of intermediate density elements

In the real world, there is no physical material that can be used for elements with “intermediate densities” since this is a virtual concept. However, with the availability of Lattice Structures, you can approximate the behavior of these virtual “intermediate densities” using varying volume fractions (or densities) and types of interconnected Beam (CBEAM) elements for each topology element. Based on the nature of these Lattice elements, they provide a reasonably accurate physical representation of the intermediate densities only when the volume fraction to stiffness relationship is as follows:

$$E = \rho^{1.8} E_0$$

This is calculated by homogenizing the lattice structure within its unit volume and comparing its stiffness with the density-penalized stiffness of the modified topology optimization. Based on extensive testing, it has been observed that an optimized topology design cell is accurately represented by a lattice unit cell if the penalty is set to 1.8 in the initial modified topology optimization. In other words, the accuracy of the correlation between the physical lattice structures and virtual intermediate densities increases as it gets closer to a penalty (p) of 1.8. This is called a “natural” penalty and corresponds to POROSITY = LOW.

However, in some cases, a more porous structure for considerations other than compliance performance may be required (for example, buckling). Then, POROSITY = MED or HIGH can be used to set lower penalty (p) values leading to a higher number of virtual intermediate elements. The number of intermediate elements is typically highest in the case of HIGH (p=1.0 or no penalty) and lower in the case of MED (p=1.25). These intermediate density elements will be replaced with lattice structures which correlate to a higher compliance (as explained earlier, the best compliance performance is only achieved when the penalty is close to 1.8).

Therefore, when MED or HIGH porosity options are used, it is important to make certain that all the critical constraints are included in Phase 2 as the structural and optimization responses may be overestimated (the difference is more pronounced when HIGH is used). Moreover, it is recommended to include these critical constraints in Phase 1 also; however, with possibly more conservative bounds when compared to Phase 2. The analysis of the <name>_lattice.fem file, which is generated at the end of Phase 1, provides a more accurate picture of the compliance performance as it contains the actual Lattice structures, instead of the virtual intermediate density elements (Figure 3).
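
To get a feel for how large the overestimation can be, the following Python sketch compares the stiffness assumed in Phase 1 for each POROSITY setting with the stiffness given by the natural penalty of 1.8. The penalty values are taken from the text above; the function names and the single-element view are simplifications introduced here, and the real difference for a full model depends on its density distribution.

```python
# Penalty values stated for each POROSITY option
POROSITY_PENALTY = {"HIGH": 1.0, "MED": 1.25, "LOW": 1.8}
NATURAL_PENALTY = 1.8


def penalized_modulus(density: float, e0: float, porosity: str) -> float:
    """Phase 1 stiffness of an element: E = rho**p * E0."""
    return e0 * density ** POROSITY_PENALTY[porosity]


def stiffness_overestimate(density: float, porosity: str) -> float:
    """Ratio of the Phase 1 stiffness to the natural-penalty (p = 1.8) stiffness.

    A value above 1.0 means Phase 1 assumes a stiffer element than the
    lattice cell that later replaces it is expected to provide.
    """
    return density ** (POROSITY_PENALTY[porosity] - NATURAL_PENALTY)


for option in ("LOW", "MED", "HIGH"):
    print(option, round(stiffness_overestimate(0.5, option), 3))
```

For an element at density 0.5, the ratio is 1.0 for LOW, about 1.46 for MED, and about 1.74 for HIGH, which is consistent with the recommendation to carry all critical constraints into Phase 2 when MED or HIGH is used.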

Figure 3: Difference between LOW, MED, and HIGH porosity options with regard to stiffness performance.

If Phase 1 constraints are important, then it is recommended to choose one or more of the following:

In the second phase, the Lattice Structure is optimized using Size optimization. The first phase represents isotropic material optimization since the Lattice micro-structural representation has isotropic (or near-isotropic) homogenized properties. The size optimization phase is aimed at incorporating some anisotropy to the Lattice Structure, thereby making the structure more efficient. For a given loading, the newly created file <name>_lattice.fem includes sizing optimization set-up. The optimization responses, constraints, and objective function should be reviewed and redefined (if necessary) in the second phase. The contact sets should also be reviewed and possibly redefined if required.

A single design variable (DESVAR) is automatically created for each joint (conjunction of lattice tapered beam elements) representing the radius of all the beam cross sections at the joint. The DESVARs are related to the radii of each tapered beam element by DVPREL entries in the size optimization process. The lower bound of the sizing design variables is automatically set to a very low value expressed in terms of UB, where UB is the upper bound of the design variables for the Lattice Sizing Optimization phase. This lower bound can be controlled using LATPRM,MINRAD and LATPRM,CLEAN.

At the end of the second phase, a new <name>_lattice_optimized.fem file is created, which includes optimized lattice structures. Beams with very small radii are automatically removed from the structure during this phase (Beam Cleaning). Therefore, a verification analysis using the <name>_lattice_optimized.fem file is recommended to look at the final responses. The parameter LATPRM,TETSPLT,YES can be used to turn on the splitting of all non-design or solid elements to Tetrahedral elements for further usage in 3D printing software.
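
The one-variable-per-joint idea can be illustrated with a short Python sketch. It only mirrors the relationship described above (each joint carries one radius, and every beam end attached to that joint takes it); the `Beam` class, the function name, and the numeric values are hypothetical and do not represent the DESVAR or DVPREL entries the solver writes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Beam:
    beam_id: int
    joint_a: int  # joint (grid) at end A
    joint_b: int  # joint (grid) at end B


def beam_end_radii(beams, joint_radius):
    """Map each beam to (radius at end A, radius at end B).

    joint_radius holds one value per joint, so all beam ends meeting at a
    joint share the same radius, and each beam tapers between its two joints.
    """
    return {
        beam.beam_id: (joint_radius[beam.joint_a], joint_radius[beam.joint_b])
        for beam in beams
    }


# Three beams meeting at joint 2; the radii are illustrative values only
beams = [Beam(101, 1, 2), Beam(102, 2, 3), Beam(103, 2, 4)]
radii = beam_end_radii(beams, {1: 0.4, 2: 0.9, 3: 0.2, 4: 0.5})
print(radii)
```

With this layout the number of design variables grows with the number of joints rather than the number of beam ends, and changing the value at joint 2 changes all three beams at once.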

During the phases of the Lattice Optimization run, the compliance of the model varies based on different internal processes. A reduction in compliance performance (that is, an increase in compliance) is generally undesirable, so it is necessary to exercise caution and be aware of the stages at which performance drops occur.

In addition to the regular constraints, stress constraints can be used in lattice optimization. Stress constraints can be applied in two ways:

Stress NORM Method

The Stress NORM method is used to approximately calculate the maximum value of the stresses of all the elements included in a particular response. This is also scaled with the stress bounds specified for each element. Therefore, to minimize the maximum stresses in a particular element set, the resulting stress NORM value ($\sigma_{NORM}$) is minimized.

$$\sigma_{NORM} = \left[\frac{1}{n}\sum_{i=1}^{n}\left(\frac{\sigma_i}{\sigma_{bound,i}}\right)^{p}\right]^{1/p}$$

Where,

$\sigma_i$ is the stress of element i and $\sigma_{bound,i}$ is its stress bound

n is the number of elements

p is a penalty (power) value (default = 6.0)

The Penalty or Power value (p) can be modified using the parameter DOPTPRM, PNORM. The value p = 6.0 is the default, and higher values (p → ∞) increase the accuracy of the stress norm function ($\sigma_{NORM}$ approaches the maximum scaled stress).

The Stress NORM feature creates two responses for a model, one stress NORM response for the elements with highest 10% of the stresses and a second stress NORM response for the rest of the model. Therefore, there may be two Stress Responses in the Retained Responses table of the OUT file.
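
The effect of the power value can be seen with a small Python sketch. It assumes the stress norm is a generalized mean of the bound-scaled element stresses, that is, the p-th root of the average of (stress / bound) raised to p; this form is an assumption consistent with the description above, not a statement of the solver's exact implementation, and the function name and sample values are illustrative.

```python
def stress_norm(stresses, bounds, p=6.0):
    """Generalized-mean stress norm of bound-scaled, non-negative stresses."""
    n = len(stresses)
    if n == 0 or n != len(bounds):
        raise ValueError("stresses and bounds must be non-empty and equal in length")
    total = sum((s / b) ** p for s, b in zip(stresses, bounds))
    return (total / n) ** (1.0 / p)


stresses = [100.0, 200.0, 300.0]   # illustrative element stresses
bounds = [400.0, 400.0, 400.0]     # illustrative stress bounds
max_scaled = max(s / b for s, b in zip(stresses, bounds))  # 0.75

for power in (6.0, 20.0, 60.0):
    print(power, stress_norm(stresses, bounds, power), max_scaled)
```

Under this assumed form, the norm stays below the maximum scaled stress and moves towards it as p grows, while lower values of p weight the less stressed elements more heavily.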

The Beam Cleaning procedure occurs at the end of the second optimization phase to remove beams with very low radii. Beam Cleaning can be controlled using LATPRM,CLEAN. The minimum value of the radii or aspect ratio can be controlled using LATPRM, MINRAD and/or LATPRM, R2LRATIO in conjunction with LATPRM,CLEAN.
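
A minimal Python sketch of such a cleaning pass is shown below. The exact rule the solver applies is not stated here, so this sketch assumes a beam is dropped when its radius falls below a minimum radius or when its radius-to-length ratio falls below a minimum ratio; the function name, argument names, and tuple layout are hypothetical.

```python
def clean_beams(beams, min_radius=None, min_r2l_ratio=None):
    """Return the ids of beams that survive cleaning.

    beams is an iterable of (beam_id, radius, length) tuples. A threshold
    left as None is not checked.
    """
    kept = []
    for beam_id, radius, length in beams:
        if min_radius is not None and radius < min_radius:
            continue  # radius too small
        if min_r2l_ratio is not None and radius / length < min_r2l_ratio:
            continue  # beam too slender
        kept.append(beam_id)
    return kept


beams = [(1, 0.50, 5.0), (2, 0.02, 5.0), (3, 0.10, 20.0)]
print(clean_beams(beams, min_radius=0.05, min_r2l_ratio=0.01))
```

After a cleaning step like this, some joints may lose beams, which is one reason a verification analysis of the final structure is worthwhile.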

OSSmooth can be invoked to activate the Smoothing process after the first optimization stage (and before Lattice generation). This is activated using LATPRM, OSSRMSH. Optionally, Remeshing can also be performed by specifying the target Mesh Size on the parameter.

At the conclusion of Phase 1, some intermediate density elements are removed from the model prior to the generation of lattice structures. This is controlled using the LB field on the LATTICE continuation line. The Lattice Structure performance is generally sensitive to the value of the Lower Bound (LB) on the lattice continuation line. Small changes in the value of LB can lead to large compliance performance variations. For example, the compliance performance can decrease considerably for a small increase in the lower bound of density (retaining fewer CBEAM elements).

LATPRM, LATLB can be used to improve performance in such cases; OptiStruct will then reanalyze the structure by assigning a very low stiffness value to the elements with density values below LB. The compliance performance of this reanalyzed structure is compared to the initial structure. If the percentage difference in performance is large, the LB value is decreased and the model is reanalyzed. This process is repeated until the difference in compliance performance falls below a certain threshold. Then the new LB value after the final iteration is used to generate the final lattice structure for Phase II. This process aims at generating a model that allows for maximum CBEAM element removal and at the same time retaining reasonable model compliance performance. If you do not specify a Lower Bound (LB) on the DTPL LATTICE continuation line, a default value of 0.1 is used for the reanalysis process.

The parameter LATPRM, LATLB, AUTO can be used to turn ON the automated reanalysis and USER (default) turns OFF the reanalysis process (user specified LB is used). A third option CHECK can be used to run a single reanalysis iteration and it will output a warning which contains the percentage difference in compliance between the original and the reanalyzed structure. The CHECK option can be used to gain information about the compliance performance of the structure using the specified LB. If the compliance performance is not as expected, then consider rerunning Phase 1, using AUTO to possibly find a better density Lower Bound (LB). Automated Reanalysis is available only for optimization models with compliance or weighted compliance as objective.
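
The reanalysis loop can be sketched as follows. This is a schematic Python illustration only: the tolerance, the halving step, the floor on LB, and the `compliance_at_lb` callback (standing in for a reanalysis with low stiffness assigned to elements below LB) are all assumptions, since the actual threshold and update rule are not given here.

```python
def auto_lower_bound(compliance_full, compliance_at_lb, lb=0.1,
                     tolerance=0.05, shrink=0.5, min_lb=1e-3):
    """Lower LB until the relative compliance difference is within tolerance.

    compliance_full  : compliance of the structure before element removal
    compliance_at_lb : callable returning the reanalyzed compliance for an LB
    Returns the final LB and the relative difference reached.
    """
    while True:
        diff = abs(compliance_at_lb(lb) - compliance_full) / compliance_full
        if diff <= tolerance or lb <= min_lb:
            return lb, diff
        lb = max(lb * shrink, min_lb)  # retain more elements and try again


# Toy model: compliance grows linearly with the amount of material removed
final_lb, final_diff = auto_lower_bound(
    compliance_full=100.0,
    compliance_at_lb=lambda lb: 100.0 * (1.0 + 2.0 * lb),
    tolerance=0.06,
)
print(final_lb, final_diff)
```

A single pass through the loop body, reporting the difference without changing LB, corresponds in spirit to the CHECK option.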

Note

| 1. | During Phase 2, Euler Buckling constraints are automatically applied to the Lattice Structure model. In theory, the column effective length factor in the Euler buckling calculation for the lattice beams varies depending on the boundary conditions affecting a particular beam. The main boundary condition influencing the column effective length factor is the way the beam is attached at its ends. Typically, the factor varies from ideally hinged (1.0) to rigidly fixed (0.5). In OptiStruct, the column effective length factor is internally set to a value between the two, and the same value is used for all beams. This implies that the resulting structure will be less conservative as compared to an ideally hinged model. If buckling performance is critical to the model, then you need to make sure the performance of the final structure is as expected. Additionally, the Buckling Safety Factor (LATPRM, BUCKSF) can be used to adjust the safety factor of the buckling load calculation. If the internally created parameter LATPRM, LATTICE, YES is reset to NO, the Euler Buckling constraints are deactivated (not recommended). |
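
The influence of the column effective length factor can be checked with the classical Euler formula, sketched here in Python for a beam of uniform solid circular section. The uniform radius, the material and geometry values, and the intermediate factor of 0.75 are illustrative assumptions; the value used internally is not stated above, and the lattice beams are tapered rather than uniform.

```python
import math


def euler_critical_load(e_modulus: float, radius: float, length: float,
                        k_factor: float) -> float:
    """Euler critical load P_cr = pi^2 * E * I / (K * L)^2 for a solid
    circular section, where I = pi * r^4 / 4."""
    inertia = math.pi * radius ** 4 / 4.0
    return math.pi ** 2 * e_modulus * inertia / (k_factor * length) ** 2


E, r, L = 210000.0, 0.5, 10.0       # illustrative values (MPa, mm, mm)
hinged = euler_critical_load(E, r, L, k_factor=1.0)
fixed = euler_critical_load(E, r, L, k_factor=0.5)
between = euler_critical_load(E, r, L, k_factor=0.75)  # hypothetical factor
print(hinged, between, fixed)
```

The rigidly fixed case carries four times the critical load of the ideally hinged case, so any factor chosen between the two predicts a higher buckling load than the hinged assumption would, which is why the note describes the result as less conservative.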

Limitations

| 1. | Global-Local Analysis, Multi-Model Optimization, Domain Decomposition Method, and 1D/3D Bolt Pretension Analysis are not supported in Lattice Optimization. |

| 2. | Shape, Free-size, Equivalent Static Load (ESL), Topography, and Level-set Topology optimizations are not supported in conjunction with Lattice Optimization. |

| 3. | Heat-Transfer Analysis and Fluid-Structure Interaction are not supported. |