Multi-Model Optimization

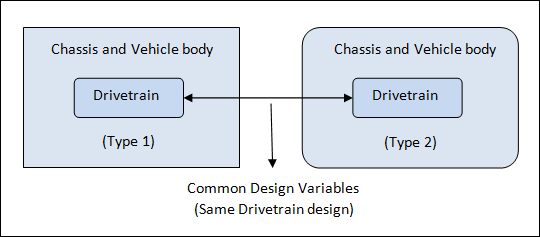

Multi-Model Optimization (MMO) is available for optimization of multiple structures with common design variables in a single optimization run.

The ASSIGN, MMO entry includes multiple solver decks in a single run and specifies a name for each model. Design variables that share the same user identification number in multiple models are treated as common and are linked across those models. Responses in multiple models can be referenced via the DRESPM continuation lines on DRESP2 and DRESP3 entries. When the same response exists in different models, it can be qualified with the name of the model on the DRESPM continuation line.
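The linking rule described above can be illustrated with a small Python sketch. It is only a conceptual illustration, not OptiStruct code: the function name `link_design_variables`, the model names, and the ID values are hypothetical. It groups design variable IDs by the models that define them and reports the IDs that appear in more than one model, which are the ones that would be linked.

```python
from collections import defaultdict


def link_design_variables(models):
    """Find design variable IDs shared by more than one model.

    `models` maps a (hypothetical) model name to the set of design
    variable IDs defined in that model's deck. Returns a dict mapping
    each shared ID to the sorted list of models that define it.
    """
    owners = defaultdict(list)
    for name, dv_ids in models.items():
        for dv_id in dv_ids:
            owners[dv_id].append(name)
    # Only IDs defined in two or more models count as common (linked).
    return {
        dv_id: sorted(names)
        for dv_id, names in owners.items()
        if len(names) > 1
    }


# Illustrative data: IDs 2 and 3 appear in both models, so they are linked.
models = {"model_a": {1, 2, 3}, "model_b": {2, 3, 10}}
linked = link_design_variables(models)
```

IDs 1 and 10 each appear in only one model, so in this sketch they remain independent design variables of their own model.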

Figure 3: Example use case for Multi-Model Optimization

Multi-Model Optimization is an MPI-based parallelization method and requires the OptiStruct MPI executables to run. Existing solver decks do not need any additional input, can be included as they are, and are fully compatible with the MMO mode. MMO allows greater flexibility to optimize components across structures. The -mmo run option activates Multi-Model Optimization in OptiStruct.

1. All optimization types are currently supported.

2. Multi-body Dynamics (OS-MBD) and Geometric Nonlinear Analysis (RADIOSS Integration) are currently not supported.

3. MMO currently cannot be used in conjunction with the Domain Decomposition Method (DDM).

4. The DTPG and DSHAPE entries are supported; however, linking of their design variables is not. For example, it makes no difference to the solution whether DSHAPE entries in different slave files share the same IDs.

Note:

1. The number of processes should be one more than the number of models.

2. Refer to Launching MMO for information on launching Multi-Model Optimization in OptiStruct.

3. If multiple objective functions are defined across different models in the master/slaves, then OptiStruct always uses minmax or maxmin [Objective(i)] (where i indexes the objective functions) to define the overall objective for the solution. The DOBJREF reference value used in minmax/maxmin is set automatically from the results of the initial iteration.

4. The following entries are allowed in the Master deck:

Control cards:

SCREEN, DIAG/OSDIAG, DEBUG/OSDEBUG, TITLE, ASSIGN, RESPRINT, DESOBJ, DESGLB, REPGLB, MINMAX, MAXMIN, ANALYSIS, and LOADLIB

Bulk data cards:

DSCREEN, DOPTPRM (see section below), DRESP2, DRESP3, DOBJREF, DCONSTR, DCONADD, DREPORT, DREPADD, DEQATN, DTABLE, and PARAM

DOPTPRM parameters (these work from within the master deck; all other DOPTPRM parameters should be specified in the slave decks):

CHECKER, DDVOPT, DELSHP, DELSIZ, DELTOP, DESMAX, DISCRETE, OBJTOL, OPTMETH, and SHAPEOPT
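The minmax treatment of multiple objectives mentioned in the notes above can be sketched in Python. This is a rough illustration under stated assumptions, not the solver's actual formulation: it assumes each objective is scaled by dividing it by its value at the initial iteration (standing in for the automatically set DOBJREF reference), and that the overall objective is the largest scaled value. The function name `minmax_objective` and the numbers are hypothetical.

```python
def minmax_objective(current, initial):
    """Return the model with the largest scaled objective and that value.

    `current` and `initial` map model names to objective values at the
    current and initial iterations. Scaling by the initial value is an
    assumption made for this sketch.
    """
    if current.keys() != initial.keys():
        raise ValueError("current and initial must cover the same models")
    if any(value == 0 for value in initial.values()):
        raise ValueError("initial objective values must be nonzero")
    # Scale each objective so that models with different magnitudes
    # can be compared on the same footing.
    ratios = {name: current[name] / initial[name] for name in current}
    worst = max(ratios, key=ratios.get)
    return worst, ratios[worst]


# Illustrative values: model_a has improved more than model_b.
initial = {"model_a": 200.0, "model_b": 50.0}
current = {"model_a": 150.0, "model_b": 45.0}
worst_model, overall = minmax_objective(current, initial)
```

In this example the scaled objectives are 0.75 for `model_a` and 0.9 for `model_b`, so a minmax formulation would focus on reducing the objective of `model_b` next.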

There are several ways to launch parallel programs with OptiStruct SPMD. Remember to propagate environment variables when launching OptiStruct SPMD, if needed. Refer to the respective MPI vendor’s manual for more details. Starting with OptiStruct 14.0, commonly used MPI runtime software is automatically included as part of the HyperWorks installation. The various MPI installations are located at $ALTAIR_HOME/mpi.
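When scripting a launch, the rule that the number of processes should be one more than the number of models is easy to encode. The Python sketch below is a minimal helper under stated assumptions: the function names and file names are hypothetical, the `-mmo` option comes from this page, and the use of `-np` to pass the process count is an assumption that should be checked against the Launching MMO documentation.

```python
def mmo_process_count(model_files):
    """Return the process count for an MMO run: number of models + 1."""
    if not model_files:
        raise ValueError("at least one model deck is required")
    return len(model_files) + 1


def mmo_launch_args(master_deck, model_files):
    """Build an argument list for an MMO launch (sketch only).

    `-mmo` activates Multi-Model Optimization; passing the process
    count via `-np` is an assumption of this sketch.
    """
    count = mmo_process_count(model_files)
    return [master_deck, "-mmo", "-np", str(count)]


# Hypothetical file names: two models need three processes.
args = mmo_launch_args("master.fem", ["model_a.fem", "model_b.fem"])
```

The returned list deliberately omits the executable or script name, since that depends on the installation and the MPI type being used.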
